Visual Phonics in Assistive Technology Application for Malaysian Sign Language

– Headed by Assoc. Prof. Dr. Azlina Ahmad.

In a typical classroom, deaf and hard-of-hearing (D/HH) students must switch their attention between the teacher’s hands, which convey the sign language (SL), and the whiteboard, slides or flash cards, which carry the written text. As a result, D/HH students miss important information, which contributes to their literacy difficulties. The purpose of this research is therefore to apply gesture recognition technology to support literacy learning for D/HH students using the Visual Phonics (VP) reading strategy.

INTRODUCTION OF RESEARCH

Sign language is an important tool that members of the deaf community use to communicate among themselves and with others who understand it. It is a visual language in which gestures combine hand shapes, hand movements and facial expressions to represent words or sentences. Various sign languages have been developed, such as American Sign Language (ASL), Australian Sign Language and European Sign Language. Malaysian Sign Language (BIM) was developed from American Sign Language in the 1970s. BIM shares 75 percent of its signs with ASL, alongside various local signs designed and adopted by the deaf community in Malaysia.

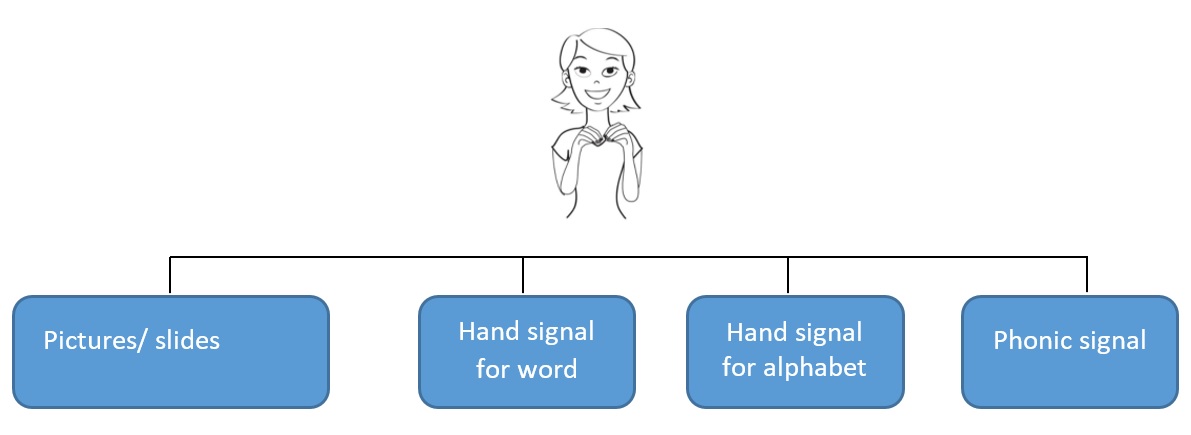

Teaching D/HH students poses challenges for both teachers and students. Teachers need to deliver lesson content through presentation slides while signing at the same time, as shown in the figure below.

D/HH students were found to be more likely to watch a teacher who translates the lesson content into sign language than to look at pictures or presentation slides. According to a recent study, D/HH students spend 31% of their time looking at the teacher’s slides and 64% of their time watching the teacher’s hand gestures. Students therefore miss key information during lessons. As a result, most D/HH students complete their education without being able to read well.

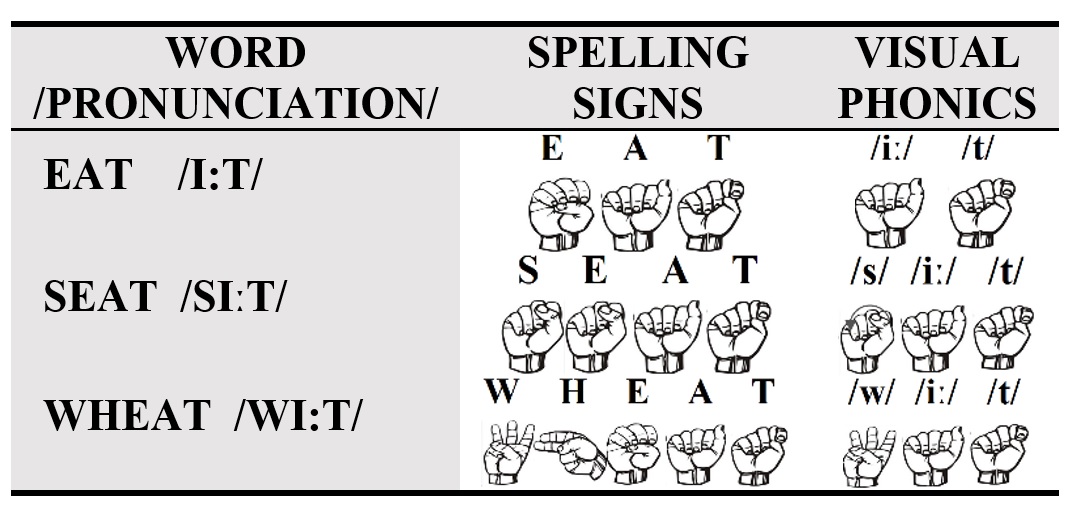

To help overcome the problems faced by D/HH students, we developed an application that uses gesture recognition technology, which detects the movement of body parts, such as the different hand gestures of sign language, through a camera. The application is built around a Kinect sensor, so D/HH students can interact with it with minimal computer skills because no input device such as a mouse or keyboard is required. The method the application uses to support reading skills is visual phonics, a system of hand gestures that represent the sounds of speech. It contains 45 hand signals representing 45 sounds of spoken English, together with written symbols that help students make connections between spoken and written language. Each hand signal reflects how the sound is produced. Each sound has a written symbol, and each written symbol is a visual representation of the hand shape and represents the same sound regardless of the spelling. The following table shows some examples of hand signals using visual phonics.
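As a rough illustration of the "same sound regardless of the spelling" idea, the minimal sketch below models a visual phonics entry as a lookup record. It is not the application's actual code: the cue identifiers (`cue_f`, `cue_k`), the `Cue` type and both helper functions are hypothetical names, and the two entries are ordinary English phonics examples rather than items taken from the real set of 45 hand signals.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Cue:
    """One illustrative entry: a hand cue tied to a single speech sound."""
    cue_id: str        # hypothetical label a gesture recogniser might emit
    sound: str         # the spoken sound the cue stands for
    spellings: tuple   # some common English spellings of that sound


# Illustrative entries only; NOT the actual visual phonics cue set.
CUES = {
    "cue_f": Cue("cue_f", "/f/", ("f", "ph", "gh")),
    "cue_k": Cue("cue_k", "/k/", ("c", "k", "ck")),
}


def sound_for_gesture(cue_id):
    """Return the sound for a recognised cue, or None if the cue is unknown."""
    cue = CUES.get(cue_id)
    return cue.sound if cue else None


def cues_for_spelling(spelling):
    """Return the ids of all cues whose sound can be written with this spelling."""
    return sorted(c.cue_id for c in CUES.values() if spelling in c.spellings)
```

Under these assumptions, the words "fish", "phone" and "laugh" would all be taught with the same cue, because the spellings "f", "ph" and "gh" map to one sound and therefore to one hand signal.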

FINDINGS OF RESEARCH

The effectiveness of the application was tested in an experiment involving D/HH students from three (3) schools. The results showed that D/HH students could easily interact with the proposed application. The students also showed significant achievement in reading and writing in the assessments conducted. This research contributes the following: (i) the Iterative and Incremental Educational Development (IIED) model; (ii) a gesture recognition application for D/HH students, based on the school syllabus and using visual phonics; and (iii) instruments for testing and evaluation.